Computer Vision (CV) is pretty awesome. It reminds me that while we humans can easily understand what we're looking at, it's way, way harder for a computer to reach our level of understanding:

Us: We see a hand!

Computer: What’s a hand? I just see two blobs.

That's where OpenCV comes in. It's a C/C++/Python library developed by Intel, Willow Garage and Itseez. OpenCV contains a boatload of algorithm implementations for image and video processing. Do you want your computer to recognize a face? You can use OpenCV to do that. Do you want your computer to track your cat? OpenCV can help. Do you want your robot to recognize three differently coloured buoys and run into the two with predefined colours? OpenCV is the answer!

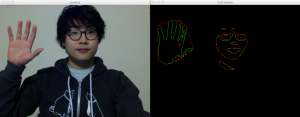

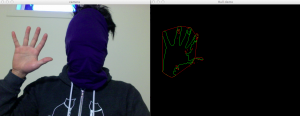

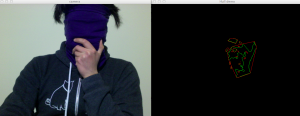

Currently, I am trying to build a hand detector/tracker. It's more difficult to implement than you might think! It takes quite a while to become acquainted with OpenCV, and sample code looks like gobbledygook at first glance, until you take it apart. Here's my progress so far.

I've made some pretty good progress, but I still have a long way to go. In a nutshell, I first find bare skin by filtering the skin hues out of each image frame. Then I draw the contours (green traces) and find the convex hull (red traces) of whatever survives the filtering. As you can see, the filtering is not perfect, and I still have to write code that makes the computer recognize my hand and not my face. I will be posting the code once I finish it!

Credits: OpenCV image from http://opencv.org