Spatial Audio Edition

A while ago, I gave a talk at The Audio Programmer meetup about how I built a spatial audio plugin. You can watch it below:

The plugin (called Orbiter) is open source and can be found on GitHub. A PDF of the slides from the talk is available here:

The video covers the development process in more detail, but some of the concepts from the talk are briefly covered in this post.

What is Spatial Audio?

Spatial Audio (SA) is the process of manipulating audio such that it is perceived to be coming from a certain direction in space.

The two audio clips below illustrate spatial audio in action (wear headphones!). The first clip is the original stereo mix while the second clip is a spatialized version of the mix:

Note that in the spatialized mix, the audio sounds like it is “revolving” around your head. As it revolves, you should be able to roughly perceive the direction the audio is coming from.

Humans can generally tell where a sound is coming from by using various properties of the sound that reaches our ears. Spatializing audio requires techniques that recreate these properties.

What is Orbiter?

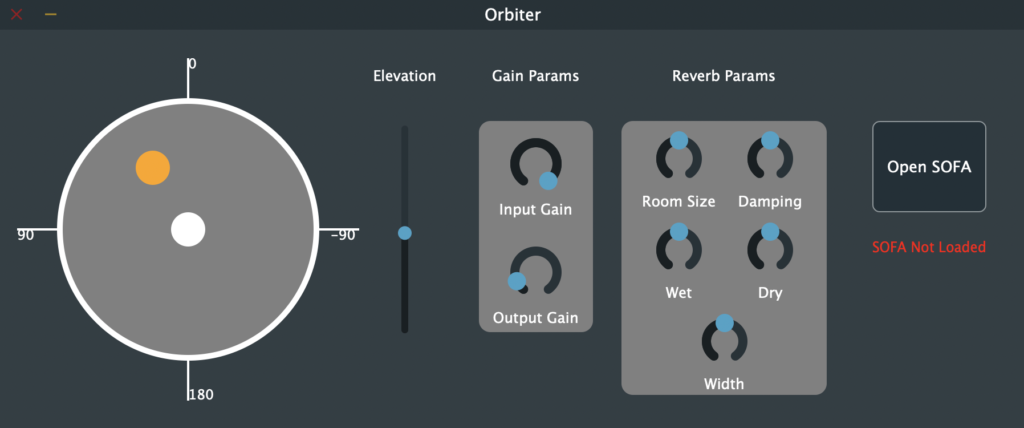

Orbiter is an experimental plugin, built with JUCE, that can be loaded into a DAW such as Ableton Live or Logic Pro X. It takes an audio stream and makes it sound as if it is coming from a certain direction.

The above screenshot shows the Orbiter GUI panel. The orange circle represents the audio source while the white circle represents your head. At the shown position, the resulting audio will sound like it is coming from the front-left.

Orbiter relies on the user to supply spatialization data that it can apply to the audio stream. The format of this data and the type of file it is stored in are discussed in further detail below.

Why Make the Plugin?

There are a number of spatial audio plugins out there that, frankly, do the job a lot better than Orbiter can. Any professional audio production should be using those plugins rather than Orbiter.

For my experiments in spatial audio, I was writing scripts in Python to process audio offline. Eventually, I wanted the option to process data in real-time. Having never created a plugin before, I also saw this as the perfect opportunity to try creating one.

Binaural Audio Processing

There are a variety of ways to spatialize audio. The method implemented in Orbiter is called binaural audio processing.

While some spatialization methods rely on multiple speakers positioned in different locations, binaural processing spatializes audio through only your headphones.

To localize sound sources, our brains use various audio cues, such as the sound’s intensity and time of arrival (ToA) at each ear. In general terms, a sound that arrives louder and sooner at one ear than at the other is perceived as coming from somewhere on that ear’s side. More exact localization depends on more subtle characteristics of the ToA and intensity differences.
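To make the two cues concrete, here is a crude sketch that fakes them directly: the far ear simply gets a delayed, quieter copy of the signal. This is only an illustration under stated assumptions (no spectral filtering, source on the left, a 22-sample delay of about 0.5 ms at an assumed 44.1 kHz, and a gain of 0.5, about -6 dB); it is not how Orbiter works, since Orbiter applies measured HRIRs. The function name `lateralize` is hypothetical.

```python
import math

def lateralize(mono, delay_samples, far_gain):
    """Crude two-cue panning toward the LEFT ear (illustration only).

    The near (left) ear gets the signal unchanged; the far (right) ear gets it
    later (delay_samples) and quieter (far_gain < 1). No spectral filtering is
    applied, so only the time and level differences are modelled.
    """
    left = list(mono) + [0.0] * delay_samples
    right = [0.0] * delay_samples + [far_gain * s for s in mono]
    return left, right

fs = 44100  # assumed sample rate
# A 1 ms burst of a 1 kHz tone as a test signal.
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs // 1000)]
# Right ear: about 0.5 ms later (22 samples) and about 6 dB quieter.
left, right = lateralize(tone, delay_samples=22, far_gain=0.5)
```

Played over headphones, a signal treated this way tends to pull toward the left, but without the spectral cues described next it usually sounds like it sits inside the head rather than at a clear position.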

Additionally, the shape of our ears spectrally changes the incoming audio, acting as a kind of filter. As we grow, our brain learns to associate these spectral characteristics, along with the differences in ToA and intensity, with different directions around our head.

It is possible to measure the filtering effect of our head and outer ears by placing special microphones in our ears, playing impulses from speakers placed in different directions and recording them. Such an impulse recording captures the ToA and intensity information and is referred to as the Head Related Impulse Response (HRIR). Spectral information can be obtained by taking the Fourier transform of the HRIR to get the Head Related Transfer Function (HRTF).
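The HRIR-to-HRTF step can be sketched with a plain discrete Fourier transform. The 8-tap "HRIR" below is a made-up toy (a direct impulse plus one weaker reflection), and the 48 kHz sample rate and function names are assumptions for illustration. Even this single reflection produces peaks and notches in the magnitude response, which is the kind of spectral colouring the ear shape adds.

```python
import cmath
import math

def dft(x):
    """Naive O(N^2) discrete Fourier transform (fine for short HRIRs)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]

def hrtf_magnitude_db(hrir, fs):
    """Return (frequency_hz, magnitude_db) pairs for the non-negative bins."""
    spectrum = dft(hrir)
    n = len(hrir)
    # Clamp tiny magnitudes so log10 never sees zero.
    return [(f * fs / n, 20 * math.log10(max(abs(spectrum[f]), 1e-12)))
            for f in range(n // 2 + 1)]

# Toy 8-tap "HRIR": a delayed impulse followed by a smaller reflection.
toy_hrir = [0.0, 0.0, 1.0, 0.0, 0.5, 0.0, 0.0, 0.0]
response = hrtf_magnitude_db(toy_hrir, fs=48000)
```

For this toy input the response is about +3.5 dB at 0 Hz and dips to about -6 dB at 12 kHz, because the reflection partly cancels the direct sound there.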

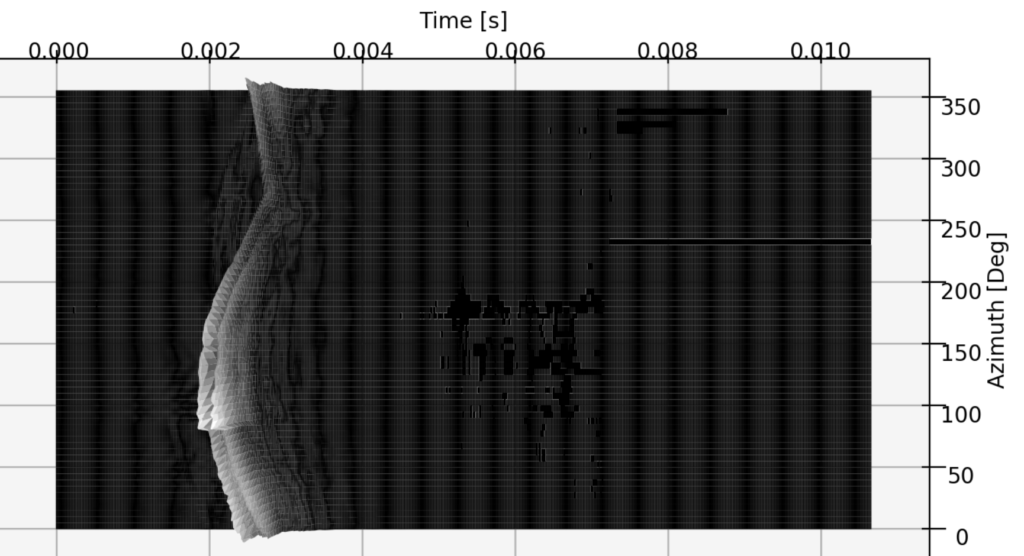

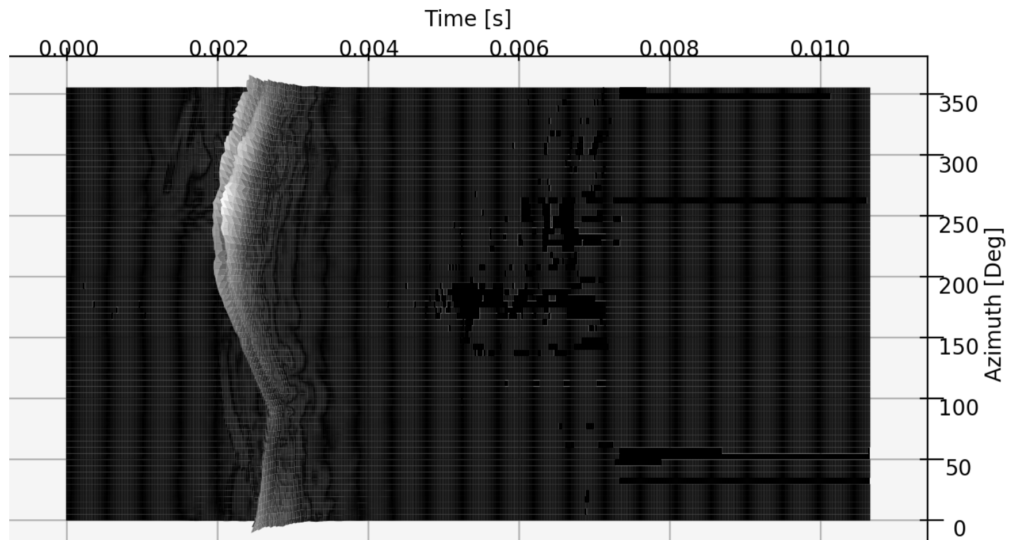

The above graphs show the collection of HRIRs measured from 0° to 355° for the left and right ears. At 90°, the audio source faces the left ear. At this direction, the impulse arrives at the left ear (around the 0.0025 sec mark) sooner than at the right ear (about 0.003 sec). The impulse intensity (the z-axis) is also greater at the left ear than at the right ear.

When the angle (azimuth) is changed to 270°, where the source faces the right ear, we observe the opposite: the impulse reaches the right ear first and at a greater intensity than the left ear.
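Reading these two cues off a pair of HRIRs can be sketched as follows. The choices here are assumptions, not necessarily how the plots above were read: arrival time is taken as the first sample reaching half the peak magnitude, intensity as the peak magnitude, and the sample rate is assumed to be 44.1 kHz. The toy HRIRs are single impulses placed near the 0.0025 sec and 0.003 sec marks mentioned above.

```python
import math

def arrival_time(hrir, fs, threshold=0.5):
    """Time (s) of the first sample whose magnitude reaches threshold * peak."""
    peak = max((abs(s) for s in hrir), default=0.0)
    if peak == 0.0:
        raise ValueError("HRIR is empty or silent")
    first = next(i for i, s in enumerate(hrir) if abs(s) >= threshold * peak)
    return first / fs

def interaural_differences(hrir_left, hrir_right, fs):
    """Return (time difference in s, level difference in dB).

    Positive values mean the left ear hears the sound earlier / louder.
    """
    itd = arrival_time(hrir_right, fs) - arrival_time(hrir_left, fs)
    ild = 20 * math.log10(max(map(abs, hrir_left)) / max(map(abs, hrir_right)))
    return itd, ild

# Toy HRIRs for a source facing the left ear.
hrir_l = [0.0] * 256
hrir_l[110] = 1.0   # about 0.0025 s at 44.1 kHz
hrir_r = [0.0] * 256
hrir_r[132] = 0.4   # about 0.003 s, and weaker
itd, ild = interaural_differences(hrir_l, hrir_r, fs=44100)
```

Here `itd` comes out at about 0.5 ms and `ild` at about 8 dB, both favouring the left ear; swapping the two HRIRs flips both signs.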

Binaural processing applies the HRIR (or, equivalently, the HRTF) for the desired direction to the audio to spatialize it.

HRIR Storage

To virtually position an audio source in different directions, we need the HRIRs associated with those directions. One challenge is how to conveniently store and access a collection of HRIRs, which is likely to be large.

One file format that stores HRIRs is SOFA (Spatially Oriented Format for Acoustics). Besides the collection of HRIRs, a SOFA file also contains information about how the impulses were measured (sampling frequency, number of measurements, etc.).

The Orbiter plugin reads SOFA files using libBasicSOFA, a small library I wrote.
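Once the HRIRs are in memory, the plugin has to find the HRIR pair closest to the requested source position. The sketch below is a hypothetical container, not libBasicSOFA's API: it loosely mirrors SOFA's layout of one impulse response per measurement and receiver (ear), plus a source azimuth per measurement, and does a nearest-neighbour lookup on azimuth only (elevation is ignored for simplicity). The class and method names are assumptions.

```python
from dataclasses import dataclass

@dataclass
class HrirSet:
    """Hypothetical in-memory HRIR collection (NOT libBasicSOFA's API).

    ir[m][r] holds the impulse response for measurement m and receiver r
    (0 = left ear, 1 = right ear); azimuths[m] is that measurement's source
    azimuth in degrees.
    """
    sample_rate: float
    azimuths: list[float]
    ir: list[list[list[float]]]

    def nearest(self, azimuth_deg: float):
        """Return (left_hrir, right_hrir) measured closest to azimuth_deg."""
        def angular_distance(a):
            d = abs(a - azimuth_deg) % 360.0
            return min(d, 360.0 - d)  # wrap around, so 355 deg is close to 0 deg
        m = min(range(len(self.azimuths)),
                key=lambda i: angular_distance(self.azimuths[i]))
        return self.ir[m][0], self.ir[m][1]

azimuths = [float(a) for a in range(0, 360, 5)]   # 0, 5, ..., 355 degrees
hrirs = HrirSet(
    sample_rate=44100.0,
    azimuths=azimuths,
    # Placeholder 1-sample "HRIRs" that just encode their own azimuth.
    ir=[[[a], [-a]] for a in azimuths],
)
left, right = hrirs.nearest(92.0)   # picks the measurement at 90 degrees
```

Snapping to the nearest measurement is the simplest choice; interpolating between neighbouring HRIRs is a common refinement, but the post doesn't say whether Orbiter does that.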

Processing

Applying HRIRs (HRTFs) can be as simple as convolving the HRIRs with the audio stream (really, no different from implementing FIR filters). However, mainly because we want to be able to change the audio source position (and hence the HRIRs), some extra steps are needed.
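In the time domain, applying an HRIR pair amounts to running two FIR filters, one per ear. A minimal sketch, assuming the HRIRs for the current position are already available as plain Python lists (the function names are illustrative):

```python
def convolve(x, h):
    """Direct (time-domain) linear convolution: len(x) + len(h) - 1 outputs."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y

def binauralize(mono, hrir_left, hrir_right):
    """Filter a mono signal with a left/right HRIR pair to get a stereo pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

left, right = binauralize([1.0, 0.5], [0.0, 1.0, 0.2], [0.0, 0.0, 0.6])
# left is about [0.0, 1.0, 0.7, 0.1]; right is about [0.0, 0.0, 0.6, 0.3]
```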

Frequency Domain Convolution

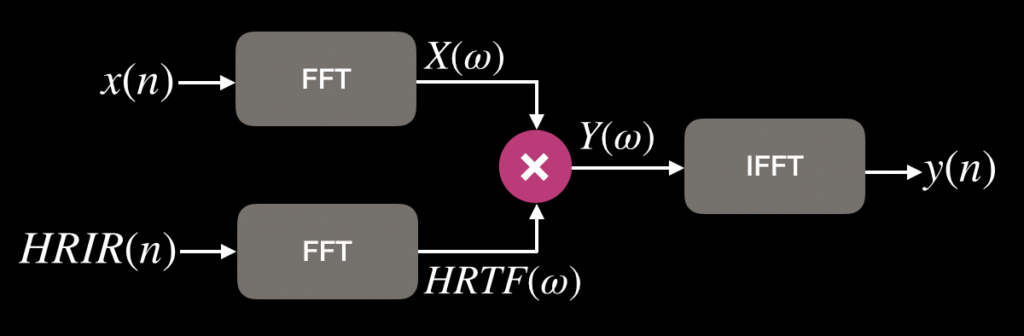

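As HRIRs can be quite lengthy, time domain convolution can be an inefficient approach. A more efficient approach is to take the Fourier transform of the HRIR and of the input signal, multiply the two spectra and then take the inverse Fourier transform of the result to get the filtered time domain signal. Both signals must first be zero-padded to at least the length of the convolution result (input length + HRIR length - 1); otherwise the FFT computes a circular convolution and the tail wraps around onto the start. This is often referred to as convolution in the frequency domain. The signal flow graph above illustrates this process.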
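The frequency-domain route can be sketched in pure Python with a textbook recursive radix-2 FFT. This is for illustration only; a real plugin would use an optimized FFT library, and the function names here are hypothetical. Note the zero-padding to a power of two at least as long as the linear convolution result.

```python
import cmath

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    twiddled = [cmath.exp(-2j * cmath.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + twiddled[k] for k in range(n // 2)] +
            [even[k] - twiddled[k] for k in range(n // 2)])

def ifft(X):
    """Inverse FFT via the conjugation trick: conj(fft(conj(X))) / N."""
    n = len(X)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in X])]

def fft_convolve(x, h):
    """Linear convolution via the frequency domain.

    Both inputs are zero-padded to a power of two >= len(x) + len(h) - 1 so
    that the FFT's circular convolution equals the linear convolution.
    """
    out_len = len(x) + len(h) - 1
    size = 1
    while size < out_len:
        size *= 2
    X = fft(list(x) + [0.0] * (size - len(x)))
    H = fft(list(h) + [0.0] * (size - len(h)))
    y = ifft([a * b for a, b in zip(X, H)])   # multiply spectra, transform back
    return [v.real for v in y[:out_len]]      # drop padding and rounding noise

y = fft_convolve([1.0, 0.5], [0.0, 1.0, 0.2])  # approximately [0.0, 1.0, 0.7, 0.1]
```

Direct convolution costs on the order of N x M multiplications, while the FFT route costs on the order of L log L for the padded length L, which is why the frequency-domain version wins once the filters get long.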

Overlap and Add

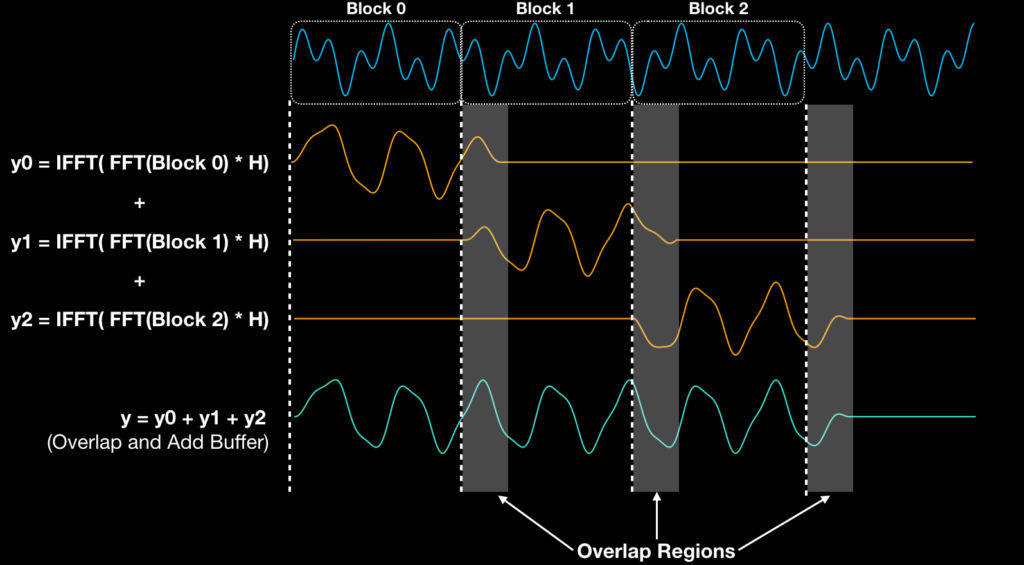

In a plugin, we are typically not given the entire input audio signal at once; instead, the host periodically provides blocks of input data. In JUCE, the member function processBlock() is called whenever a fresh block of input audio is ready to be processed, which makes it the starting point for applying HRIRs.

When processing in the frequency domain, we apply the Fourier transform to individual sections (blocks) of the audio signal and process each one. Because convolution is linear, filtering the blocks separately and adding the results back together at the correct positions produces the same output as filtering the entire audio input at once.

For a given block, the number of processed samples is not the same as the number of input samples: convolving a block of N samples with an HRIR of M samples produces N + M - 1 samples. The extra tail of M - 1 samples is added to the first few samples of the next processed block.

The image above illustrates the overlap and add process.
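A minimal overlap-add sketch for a fixed filter, with non-overlapping input blocks and no windowing yet. The `process_block` name only echoes JUCE's processBlock(); the class is hypothetical, and it uses direct convolution per block to keep it short (the same bookkeeping applies when each block is convolved via FFT).

```python
def convolve(x, h):
    """Direct linear convolution: len(x) + len(h) - 1 outputs."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y

class OverlapAdd:
    """Streams blocks through a fixed FIR filter using overlap-add."""

    def __init__(self, h):
        self.h = list(h)
        self.tail = [0.0] * (len(self.h) - 1)  # samples carried to the next block

    def process_block(self, block):
        y = convolve(block, self.h)          # len(block) + len(h) - 1 samples
        for i, t in enumerate(self.tail):    # add the previous block's tail
            y[i] += t
        n = len(block)
        self.tail = y[n:]                    # keep this block's tail for later
        return y[:n]                         # emit exactly one block of output

h = [0.5, 0.25, 0.125, 0.0625]
signal = [float(i) for i in range(1, 11)]
ola = OverlapAdd(h)
streamed = []
for start, stop in [(0, 4), (4, 8), (8, 10)]:   # uneven block sizes are fine
    streamed += ola.process_block(signal[start:stop])
# `streamed` matches the first 10 samples of convolve(signal, h); the last
# len(h) - 1 samples of the full result are still waiting in `ola.tail`.
```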

Up until now, the blocks have not overlapped: each block contained only new samples. This does not have to be the case. The next block can start in the middle of the previous one, in which case the blocks are said to overlap. Overlapping is particularly useful when the filter characteristics change over time, which is the case for Orbiter (the HRIRs change with the source position).

It is also important to note that when blocks overlap in this way, each input block must be windowed before it is processed. Popular choices include the Hann (Hanning) window and the Bartlett (triangular) window.
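One reason these windows are popular is that, at the right hop size, shifted copies of them sum to a constant (the constant-overlap-add property), so windowing does not leave a periodic amplitude ripple once the blocks are added back together. The sketch checks this for periodic Hann and triangular windows at 50% overlap. The window length of 8 and the hop of half a window are assumptions for illustration; the post does not state what Orbiter uses.

```python
import math

def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def bartlett(n):
    """Periodic triangular (Bartlett) window of length n (n even)."""
    half = n / 2
    return [1.0 - abs(i - half) / half for i in range(n)]

def overlap_sum(window, hop, num_frames):
    """Sum shifted copies of `window`, one starting every `hop` samples."""
    total = [0.0] * (hop * (num_frames - 1) + len(window))
    for f in range(num_frames):
        for i, w in enumerate(window):
            total[f * hop + i] += w
    return total

N = 8
hann_sum = overlap_sum(hann(N), hop=N // 2, num_frames=6)
tri_sum = overlap_sum(bartlett(N), hop=N // 2, num_frames=6)
# Away from the first and last half-window, both sums are 1.0 everywhere.
```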

Crossfading

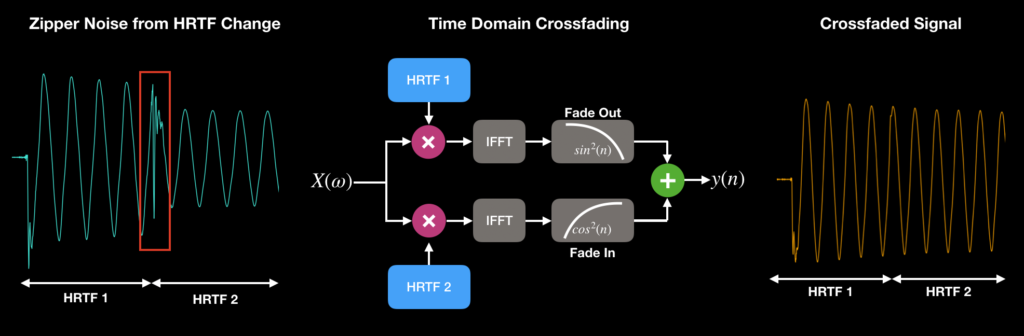

When the source position is changed, the HRIR in use must be switched to the HRIR associated with the new position. Simply swapping HRIRs between blocks causes unpleasant zipper/click artifacts, so a smooth transition between the previous HRIR and the new one is needed.

One way to achieve this is to create two processed versions of the same block of samples. In one version, the old HRIR is applied and the result is faded out with an envelope that goes to zero; in the other, the new HRIR is applied and the result is faded in. The two signals are then added together, giving a transition with reduced artifacts.
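A sketch of that crossfade for one ear and one block, assuming linear fade envelopes (the post does not specify the envelope shape). It deliberately leaves out the tail bookkeeping of overlap-add, and the function name is hypothetical. Because the fade-in and fade-out always sum to 1, crossfading between two identical HRIRs leaves the signal unchanged.

```python
def convolve(x, h):
    """Direct linear convolution: len(x) + len(h) - 1 outputs."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y

def crossfade_block(block, old_hrir, new_hrir):
    """Filter one block with both HRIRs and fade linearly from old to new.

    Assumes old_hrir and new_hrir have the same length, so both filtered
    versions have the same number of samples.
    """
    old = convolve(block, old_hrir)
    new = convolve(block, new_hrir)
    n = len(old)
    out = []
    for i in range(n):
        fade_in = i / (n - 1) if n > 1 else 1.0   # ramps 0 -> 1
        fade_out = 1.0 - fade_in                  # ramps 1 -> 0
        out.append(fade_out * old[i] + fade_in * new[i])
    return out

out = crossfade_block([1.0, 1.0, 1.0, 1.0], old_hrir=[1.0], new_hrir=[0.0])
# out is roughly [1.0, 0.667, 0.333, 0.0]: the old response fades to silence
```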

Adding Reverb

Binaural audio processed with HRIRs measured in anechoic conditions tends to sound as if it is coming from inside the head. It turns out that the reverberant properties of a room play a role in externalization (i.e. making the audio sound like it is coming from outside our head). Orbiter takes a simple approach: it mixes a reverberated version of the input audio with the binaural version.
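The mixing step itself is just a weighted sum. In the sketch below, a single feedback comb filter stands in for the reverb; it is a toy chosen for brevity, not the reverb Orbiter uses. The wet level, delay, feedback value and placeholder binaural signal are all assumptions, and the mix is shown for one ear only.

```python
def comb_reverb(x, delay, feedback, length):
    """Toy feedback comb filter: y[n] = x[n] + feedback * y[n - delay].

    Keep |feedback| < 1 so the echoes decay. A single comb is far from a good
    reverb; it only stands in for one here.
    """
    y = [0.0] * length
    for n in range(length):
        xn = x[n] if n < len(x) else 0.0
        y[n] = xn + (feedback * y[n - delay] if n >= delay else 0.0)
    return y

def mix_with_reverb(binaural, reverberated, wet):
    """Blend per sample: (1 - wet) * binaural + wet * reverberated.

    zip() stops at the shorter input, so pass signals of equal length.
    """
    return [(1.0 - wet) * d + wet * r for d, r in zip(binaural, reverberated)]

rev = comb_reverb([1.0], delay=3, feedback=0.5, length=10)
# rev == [1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25, 0.0, 0.0, 0.125]
binaural_left = [0.0, 1.0, 0.7, 0.1] + [0.0] * 6   # placeholder binaural output
out_left = mix_with_reverb(binaural_left, rev, wet=0.3)
```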

Final Notes

The Orbiter plugin is far from perfect. It does not take into account many of the factors involved in creating a convincing spatial illusion, so its spatialization is rather lacklustre.

However, the point of this exercise was to try building a plugin and to implement what I had learned about spatial audio so far. It is very much an experimental playground, and I may improve the plugin as I learn more.